Why linking experience quality to business KPIs is “enterprise level”

Low-cost survey tools usually stop at collecting opinions and displaying simple charts. That may create a sense of control, but it is not management. The enterprise level begins when you can answer the question: does improving customer experience quality actually impact business results?

This is what separates “we run surveys because we should” from real decision-making, budgeting, and prioritization.

Which business KPIs are most often linked to experience quality?

Not every business metric makes sense in a CX context. It is best to start with KPIs that logically stem from customer experience.

Examples of KPIs that often respond to experience quality:

- retention (return visits, repeat purchases),

- churn (customer loss),

- revenue per customer (ARPU / AOV, depending on the model),

- number of complaints and product returns,

- cost of service (support time, number of tickets),

- conversion at key stages (e.g. payment, onboarding).

The key is to choose KPIs for which experience quality has a credible path of influence.

Why correlation may not be visible immediately

Even if experience quality truly affects KPIs, the effect will not necessarily show up right away on a weekly chart.

The most common reasons:

- delayed effect – customers decide to return after some time,

- indirect impact – quality reduces problems, which then affects costs and churn,

- noise and seasonality – campaigns, holidays, weather, or pricing can mask quality effects,

- different segment reactions – improvements may apply only to part of the customer base.

A mature approach is therefore not “is there a correlation today?”, but: do we see a repeatable relationship over time, within segments and touchpoints?
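One way to look for a delayed effect is to correlate the quality score in one period with the KPI a few periods later. The sketch below is only an illustration: the weekly figures are invented, the function names are hypothetical, and the churn series was deliberately constructed so that it follows quality with a two-week delay. Real data will be far noisier, and a correlation found this way is a hint to investigate, not proof of causation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # correlation is undefined for a constant series
    return cov / sqrt(var_x * var_y)


def lagged_correlations(quality, kpi, max_lag):
    """Correlate quality in period t with the KPI in period t + lag."""
    results = {}
    for lag in range(max_lag + 1):
        shifted_quality = quality[: len(quality) - lag]
        shifted_kpi = kpi[lag:]
        if len(shifted_quality) < 3:
            break  # too few overlapping periods to say anything
        results[lag] = pearson(shifted_quality, shifted_kpi)
    return results


# Illustrative weekly data (invented): average rating and churn in percent.
weekly_quality = [4.5, 4.4, 3.9, 3.8, 4.2, 4.6, 4.5, 4.0, 4.3, 4.6]
weekly_churn = [1.5, 1.4, 1.0, 1.2, 2.2, 2.4, 1.6, 0.8, 1.0, 2.0]

results = lagged_correlations(weekly_quality, weekly_churn, max_lag=3)

# Lower quality should mean higher churn, so look for the most negative value.
strongest_lag = min(results, key=results.get)
for lag, r in results.items():
    print(f"lag {lag} weeks: r = {r:.2f}")
print("strongest negative relationship at lag:", strongest_lag)
```

Running the same calculation separately per segment or touchpoint shows whether the relationship repeats or appears only in part of the customer base.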

From opinions to impact: you need shared data context

If you keep:

- feedback and ratings in one place,

- and business KPIs in spreadsheets, CRM, or POS systems,

you usually end up with intuition instead of evidence.

To talk seriously about impact, you need:

- a shared time frame (comparable periods),

- segments (e.g. location, channel, touchpoint),

- reference points (a baseline before changes),

- trend analysis rather than single data points.

This is why feedback without business data is only a half-finished product.
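To make "shared data context" concrete, here is a minimal sketch of bringing ratings and a KPI onto the same time frame and segment. Everything in it is an assumption for illustration: the record layout, the field names (`day`, `location`, `rating`, `week`, `complaints`), and the choice of ISO weeks as the common period. It does not describe how any particular CX tool stores its data.

```python
from collections import defaultdict
from datetime import date

# Hypothetical feedback records: one row per rating.
feedback = [
    {"day": date(2024, 3, 4), "location": "A", "rating": 4},
    {"day": date(2024, 3, 6), "location": "A", "rating": 5},
    {"day": date(2024, 3, 5), "location": "B", "rating": 2},
    {"day": date(2024, 3, 12), "location": "A", "rating": 3},
]

# Hypothetical KPI export: complaints per ISO week and location.
kpis = [
    {"week": (2024, 10), "location": "A", "complaints": 3},
    {"week": (2024, 10), "location": "B", "complaints": 9},
    {"week": (2024, 11), "location": "A", "complaints": 5},
    {"week": (2024, 11), "location": "B", "complaints": 7},
]


def iso_week(d):
    """Return (ISO year, ISO week number) so both sources share one period key."""
    iso = d.isocalendar()
    return (iso[0], iso[1])


def average_rating_by_segment(feedback_rows):
    """Average rating per (week, location)."""
    totals = defaultdict(lambda: [0, 0])  # key -> [sum of ratings, count]
    for row in feedback_rows:
        key = (iso_week(row["day"]), row["location"])
        totals[key][0] += row["rating"]
        totals[key][1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


def join_with_kpis(avg_ratings, kpi_rows):
    """Attach the average rating to each KPI row that shares week and location."""
    joined = []
    for row in kpi_rows:
        key = (row["week"], row["location"])
        joined.append({
            "week": row["week"],
            "location": row["location"],
            "avg_rating": avg_ratings.get(key),  # None when no feedback exists
            "complaints": row["complaints"],
        })
    return joined


joined = join_with_kpis(average_rating_by_segment(feedback), kpis)
for row in joined:
    print(row)
```

Rows where `avg_rating` is `None` are worth keeping visible: a segment with KPIs but no feedback is a measurement gap, not a neutral result.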

What does importing KPIs into a CX system enable?

Importing business data into a CX tool is not a “nice-to-have”. It is the foundation for moving from opinions to management.

With KPIs inside the system, you can:

- see whether drops in quality precede changes in churn or complaints,

- compare locations not only by ratings, but by actual business impact,

- measure the effect of corrective actions (before/after) on KPIs,

- build budget arguments: “this improvement reduces service costs”,

- avoid cosmetic actions that improve scores but do not change outcomes.

This is the moment when CX becomes part of company management – not just a marketing initiative.
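A before/after comparison is easier to defend when it is set against a location that did not receive the corrective action, because seasonality and campaigns affect both. The sketch below uses that idea in its simplest form (a basic difference-in-differences style comparison, which is a technique added here for illustration, not one named in this article). The complaint counts, the change point, and the function name are all invented.

```python
from statistics import mean


def before_after_change(series, change_index):
    """Mean of the periods after the change minus the mean of the periods before."""
    before = series[:change_index]
    after = series[change_index:]
    return mean(after) - mean(before)


# Illustrative weekly complaint counts (invented).
# The corrective action is assumed to start at week index 4 in the first location.
with_action = [12, 11, 13, 12, 9, 8, 9, 8]
without_action = [10, 11, 10, 11, 10, 10, 11, 9]

action_change = before_after_change(with_action, 4)
background_change = before_after_change(without_action, 4)

# What remains after subtracting the change that happened anyway.
net_effect = action_change - background_change

print("change with action:", action_change)
print("change without action:", background_change)
print("net effect:", net_effect)
```

With only a handful of periods this remains an indication rather than a measurement; the value of the comparison is that it stops a general seasonal drop from being credited to the corrective action.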

How to approach this practically (without magic or false promises)

A simple, safe implementation approach:

- choose 1–2 KPIs with the most credible path of influence (e.g. complaints and churn),

- anchor measurement to specific touchpoints in the Customer Journey,

- define CX drivers to understand what you are improving,

- segment results by time, place, and channel,

- observe trends over time and look for delayed effects (weeks, months, quarters).

Do not promise 1:1 correlations. Promise better decision quality.

Linking experience quality with KPIs in Data Responder

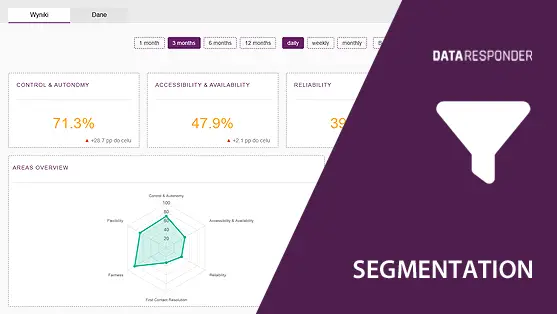

Data Responder supports this approach by bringing together, in one place:

- operational feedback (forms, QR codes, terminals),

- context (touchpoints and Customer Journey),

- CX drivers (the interpretation layer),

- segmentation (time, location, channel),

- and reference business KPIs (via import or manual entry).

This allows you to analyze experience quality and business KPIs side by side, using the same dimensions – without manual spreadsheet stitching.

Conclusions

Linking experience quality with business KPIs is one of the most difficult parts of CX – but also the one that most clearly distinguishes mature programs from “surveys for the sake of it”.

For this to work:

- do not expect immediate 1:1 correlations,

- focus on trends and possible delayed effects,

- analyze results by segments and touchpoints,

- import KPIs to base decisions on data, not intuition.

When experience quality and business KPIs meet within one analytical model, feedback stops being “opinion” and becomes a management tool.